Research

Network Acceleration and Time Synchronization for Windows Applications

- Designed a user-mode network stack that reduces the network latency and jitter of applications running on the Microsoft Windows operating system. The stack is implemented entirely in software and runs on commodity computer hardware. Existing applications are transparently redirected to it through replacement dynamic link libraries that intercept and redirect standard networking API calls. A software device driver redirects incoming packets into the user-mode stack, allowing accelerated traffic (specifically, TCP and UDP over IPv4 and IPv6) to bypass the Windows data path.

In addition to the user-mode network stack, the project produced a network-based software clock with microsecond resolution at the user-mode software level. This synchronization solution is compatible with Microsoft Windows and the NTPv4 standard, requires no hardware support, and runs over a commodity Ethernet network shared with application data, which significantly reduces the cost of deployment. It consists of three parts: a central Windows time service; an API that gives all user-mode applications access to this unified time source without the delay of a system call; and a Windows device driver that identifies time synchronization packets in the network stack and bypasses key sources of delay in the operating system.
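Because the clock is described as NTPv4-compatible, the standard NTP on-wire calculation illustrates why operating-system delay matters to it. The sketch below is not the project's code; the class and function names are hypothetical, and it only shows the textbook offset and round-trip formulas computed from the four timestamps of one client/server exchange.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NtpExchange:
    """Four timestamps, in seconds, from one client/server exchange."""
    t1: float  # client transmit time, read from the client clock
    t2: float  # server receive time, read from the server clock
    t3: float  # server transmit time, read from the server clock
    t4: float  # client receive time, read from the client clock


def clock_offset(x: NtpExchange) -> float:
    """Estimated offset of the server clock relative to the client clock."""
    return ((x.t2 - x.t1) + (x.t3 - x.t4)) / 2.0


def round_trip_delay(x: NtpExchange) -> float:
    """Round-trip network time, excluding the server's processing time."""
    return (x.t4 - x.t1) - (x.t3 - x.t2)


# Illustrative values: the server clock is 0.5 s ahead of the client,
# each one-way trip takes 0.25 s, and the server holds the packet for 0.25 s.
sample = NtpExchange(t1=10.0, t2=10.75, t3=11.0, t4=10.75)
print(clock_offset(sample))      # 0.5
print(round_trip_delay(sample))  # 0.5
```

The offset formula assumes the two one-way delays are equal. Any extra, variable delay added between the wire and the point where a timestamp is taken appears directly as offset error, which is consistent with the text's emphasis on bypassing sources of delay in the operating system.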

Hadoop Distributed File System (HDFS)

- Hadoop is a popular open-source implementation of MapReduce for the analysis of large datasets. To manage storage resources across the cluster, Hadoop uses a distributed user-level filesystem, HDFS, which is written in Java and designed for portability across heterogeneous hardware and software platforms. This project addresses a number of bottlenecks in the Hadoop implementation that stem from tradeoffs between portability and performance, such as implicit assumptions about how the native OS and filesystem underlying Hadoop manage storage resources.

The Axon Network Device

- Datacenter networks must provide low latency, fault tolerance, security, manageability, and scalability. Current hierarchical IP-Ethernet networks meet many of these requirements but incur significant management and performance overheads.

The Axon network device is being developed to address the shortcomings of hierarchical IP-Ethernet networks. The Axon device implements transparent source routing, making the network appear as a single, large Ethernet segment to connected hosts. Inside the network, however, Axons use source routing, eliminating Ethernet's barriers to scalability: spanning trees, traffic flooding, and distributed state. The flexibility of source routing and the simplicity of Ethernet make an Axon network a viable replacement for hierarchical IP-Ethernet networks in the datacenter. Prototypes of the Axon have been implemented on the NetFPGA platform and used to demonstrate the performance and backwards compatibility of source-routed Ethernet.

- For more information, please read The Axon Network Device: Prototyping with NetFPGA
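To make the source-routing idea concrete, here is a minimal sketch of how a route chosen at the edge of the network can replace per-switch forwarding state. It is an illustration under stated assumptions, not the Axon design: the topology, the representation of a route as a list of output ports, and the use of breadth-first search at the ingress device are all hypothetical.

```python
from collections import deque

# Hypothetical topology: switch name -> {output port: neighbor name}.
# Neighbors that are not keys of this dict are hosts.
TOPOLOGY = {
    "A": {1: "host1", 2: "B", 3: "C"},
    "B": {1: "A", 2: "D"},
    "C": {1: "A", 2: "D"},
    "D": {1: "B", 2: "C", 3: "host2"},
}


def compute_route(topology, ingress, dst_host):
    """Breadth-first search from the ingress switch.

    Returns the output port to take at each hop on a shortest path.
    """
    queue = deque([(ingress, [])])
    visited = {ingress}
    while queue:
        switch, ports = queue.popleft()
        for port, neighbor in topology[switch].items():
            if neighbor == dst_host:
                return ports + [port]
            if neighbor in topology and neighbor not in visited:
                visited.add(neighbor)
                queue.append((neighbor, ports + [port]))
    raise ValueError(f"no route from {ingress} to {dst_host}")


def deliver(topology, ingress, route):
    """Forward hop by hop: each switch only consumes the next port in the route."""
    node, remaining = ingress, list(route)
    while remaining:
        node = topology[node][remaining.pop(0)]
    return node


route = compute_route(TOPOLOGY, "A", "host2")
print(route)                          # [2, 2, 3]
print(deliver(TOPOLOGY, "A", route))  # host2
```

In this sketch only the ingress device needs to know the topology. Interior switches keep no address tables and never flood, which corresponds to the distributed state and traffic flooding the text says source routing eliminates.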

RiceNIC: A Reconfigurable and Programmable Gigabit Ethernet Card

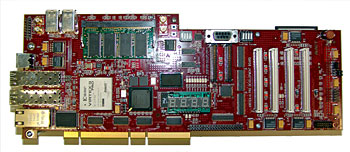

- RiceNIC is a reconfigurable and programmable Gigabit Ethernet network interface card (NIC). It is an open platform meant for research and education in network interface design. The NIC is implemented on a commercial FPGA prototyping board that includes two Xilinx FPGAs, a Gigabit Ethernet interface, a PCI interface, and both SRAM and DRAM memories. The Xilinx Virtex-II Pro FPGA on the board also includes two embedded PowerPC processors. RiceNIC provides significant computation and storage resources that are largely unused when performing the basic tasks of a network interface. These spare resources are available to customize the behavior of RiceNIC. This capability and flexibility make RiceNIC a valuable platform for research and education in current and future network interface architectures.

- For more information, visit: http://www.cs.rice.edu/CS/Architecture/ricenic/

Concurrent Direct Network Access (CDNA) for Virtual Machine Monitors

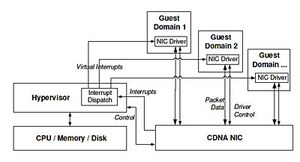

Virtual machine monitors (VMMs) allow multiple virtual machines running on the same physical machine to share hardware resources. To support networking, such VMMs must virtualize the machine’s network interfaces by presenting each virtual machine with a software interface that is multiplexed onto the actual physical NIC. The overhead of this software-based network virtualization severely limits network performance.

RiceNIC was used in research with the Xen VMM that eliminated the performance limits of software multiplexing by giving each virtual machine safe, direct access to the network interface. To accomplish this, network traffic multiplexing was performed directly on RiceNIC rather than in software on the host, which required both hardware and firmware modifications to RiceNIC. The resulting networking architecture is called Concurrent Direct Network Access (CDNA).
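The idea of multiplexing on the NIC rather than in host software can be sketched as a toy model. This is not the CDNA implementation: the class and method names are hypothetical, demultiplexing by destination MAC address is an assumption made for illustration, and the protection mechanisms that make direct access safe are not modeled.

```python
from collections import deque


class MultiplexingNic:
    """Toy model of a NIC that keeps one receive queue per virtual machine."""

    def __init__(self):
        # Destination MAC address (str) -> queue of frames for the owning VM.
        self.rx_queues = {}

    def attach_vm(self, mac):
        """Give a VM its own receive queue and return it."""
        queue = deque()
        self.rx_queues[mac] = queue
        return queue

    def receive(self, dst_mac, payload):
        """Demultiplex on the NIC: put the frame straight into the owning VM's queue."""
        queue = self.rx_queues.get(dst_mac)
        if queue is None:
            return False  # no attached VM owns this address, so drop the frame
        queue.append(payload)
        return True


nic = MultiplexingNic()
vm1 = nic.attach_vm("02:00:00:00:00:01")
vm2 = nic.attach_vm("02:00:00:00:00:02")
nic.receive("02:00:00:00:00:02", b"hello vm2")
print(len(vm1), len(vm2))  # 0 1
```

Each virtual machine reads only from its own queue, so no software layer on the host has to inspect and copy every frame on behalf of the guests.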

- For more information on CDNA, please read the HPCA '07 publication: Concurrent Direct Network Access for Virtual Machine Monitors